Note: this Analyst Report on ControlTheory was prepared by Breakthrough Moments and posted here for your research and review.

Introduction: The Observability Crisis

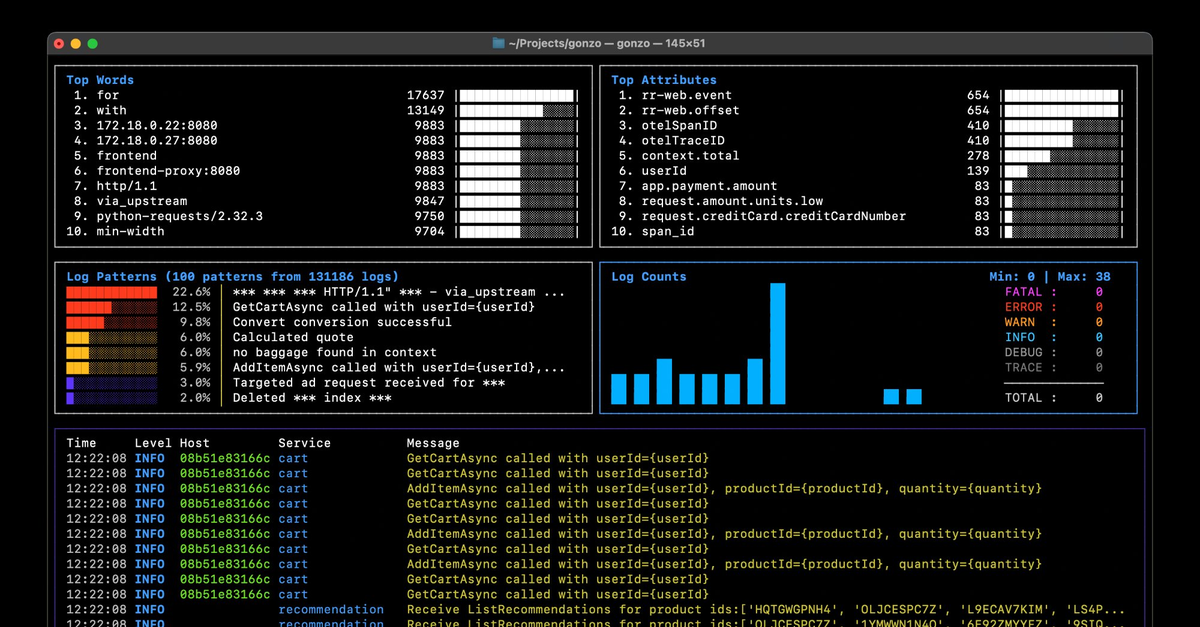

At 3:17 AM, the fluorescent hum of the operations center was a cruel counterpoint to the crimson tide of alerts washing over Sarah’s dashboard wall. As a seasoned Site Reliability Engineer, she knew this feeling well: a critical customer-facing application was bleeding revenue, but the root cause was buried somewhere in a sprawling web of interconnected microservices. Her MELT data (metrics, events, logs, and traces) was meant to bring clarity; instead, it was a tsunami of noise, drowning her team in millions of entries a minute.

High-cardinality custom metrics moved like molasses, misconfigured OpenTelemetry agents silently swallowed crucial traces, and despite 15+ tools, no one could definitively say what had broken, or why. Root cause analysis wasn’t just difficult; it was impossible. Mean Time To Repair (MTTR)? A nightmare stretching into hours, then days. This wasn’t an anomaly; it was the brutal, daily reality of an unoptimized observability landscape.

In the following sections, you’ll discover how this “observability crisis” is not merely a tooling problem, but a deep architectural flaw, and why the rise of intelligent software demands an entirely new approach. We’ll delve into the innovative concept of “controllability” and uncover how it transforms passive data collection into active, intelligent command, leading to dramatic cost reductions, enhanced operational efficiency, and a future-proofed telemetry strategy.

Architectural Fragility Beneath the Surface

This isn’t just a tooling problem—it’s an architectural one. Most telemetry systems today are dumb pipes feeding fat lakes. Every event, metric, and trace is shoveled into massive cloud storage with little filtering, correlation, or intent.

These systems are engineered to collect everything, but understand nothing—like security cameras recording every hallway in a building, yet lacking the intelligence to recognize a break-in or even replay the right footage when it matters. The result is:

- Soaring ingestion and storage costs

- Noise overwhelming signal

- Unmanageable data volumes

- Telemetry systems blind to their own health

Meanwhile, technical debt accumulates quietly but dangerously:

- Hardcoded fixes become permanent

- Outdated dependencies break silently

- Testing is skipped under pressure

- Documentation gaps stall root cause analysis (RCA)

Even promising frameworks like OpenTelemetry become dangerous when misconfigured. Auto-instrumentation and poor staging practices lead to:

- Silent trace loss

- Resource overuse degrading app performance

- Exposed PII and security gaps

- No visibility into agent health

Without a fundamentally smarter architecture—one that favors signal over scale—today’s observability stack simply cannot keep up with system complexity.

A Future of Intelligent Systems Demands Intelligent Observability

This would be bad enough if systems were standing still. But they’re not.

The age of intelligent software is here. AI copilots, autonomous agents, and self-adaptive infrastructure are rapidly reshaping the operational landscape. These systems don’t just require observability—they generate new telemetry demands:

- Fine-grained state tracking across agent decisions

- LLM prompts, outputs, and chain-of-thought telemetry

- Real-time feedback loops requiring low-latency signal processing

- Multi-modal telemetry blending structured and unstructured data

This will produce a new observability explosion, dwarfing today’s data volumes. And dumb pipes won’t survive it.

To operate intelligent systems at scale, observability must evolve:

- From passive collection to active interpretation

- From fat lakes to context-aware, queryable fabrics

- From black-box pipelines to self-aware telemetry flows

The next generation of observability must be:

- Composable: Built from modular, API-first components

- Self-reflective: Capable of monitoring itself in real time

- Policy-aware: Enforcing telemetry governance and cost controls

- AI-augmented: Using machine learning to separate signal from noise

Reclaiming Control: Engineering for the Future

The future of observability is not more data. It’s better design.

We must stop treating observability as an afterthought or a tooling arms race. Instead, we need to engineer for it. That means designing systems with observability as a first-class concern:

- Instrumentation must be intentional and auditable

- Telemetry must be structured, filtered, and contextualized at the source

- Pipelines must be resilient, observable, and introspective

Done right, observability becomes a strategic capability—a control plane for understanding, governing, and evolving complex systems in real time.

The age of intelligent software demands nothing less.

Coming Next: From MELT to Meaning. In the next chapter, we’ll explore how traditional MELT (Metrics, Events, Logs, Traces) frameworks are being redefined in this new era, and what it will take to design observability that sees not just what is happening, but why.

ControlTheory: From Observing Chaos to Commanding Clarity

Amidst this landscape of escalating costs, operational frustration, and diminishing returns, ControlTheory emerges not with an incremental improvement, but with a fundamental paradigm shift. It introduces the critical concept of “controllability,” a powerful counterpart to observability rooted in the rigorous mathematical discipline of control theory. Observability measures how well a system’s internal states can be inferred from its external outputs. Controllability is the property that allows a system to be steered toward a desired state through deliberate inputs and feedback.
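For reference, both properties have precise textbook definitions for a linear time-invariant (LTI) system. The block below states the standard Kalman rank conditions. It is included only to ground the terminology; it is not a description of how ControlTheory's product works internally.

```latex
% LTI system: state x(t) \in \mathbb{R}^n, input u(t) \in \mathbb{R}^m, output y(t) \in \mathbb{R}^p
\dot{x}(t) = A\,x(t) + B\,u(t), \qquad y(t) = C\,x(t)

% Controllable: inputs can drive x from any initial state to any target state in finite time
\operatorname{rank}\begin{bmatrix} B & AB & A^{2}B & \cdots & A^{n-1}B \end{bmatrix} = n

% Observable: the full state x can be reconstructed from the measured outputs y
\operatorname{rank}\begin{bmatrix} C \\ CA \\ CA^{2} \\ \vdots \\ CA^{n-1} \end{bmatrix} = n
```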

ControlTheory’s founders, drawing on deep expertise in Mathematical Science and Electrical Engineering, recognized that the static, passive nature of traditional telemetry pipelines was the root cause of the industry’s pain. They saw pipelines acting like “one-way valves with no feedback controls,” incapable of dynamic adjustment or intelligent interaction.

ControlTheory’s core innovation applies the adaptive feedback loop principles commonly used in stabilizing aircraft, regulating power grids, and optimizing complex industrial systems to the observability domain. By continuously measuring outputs, adjusting inputs, and leveraging these feedback loops, the system dynamically optimizes performance to maintain a desired state.

Negative feedback corrects errors and maintains stability, while positive feedback can drive rapid scaling. ControlTheory posits that by embedding these loops within telemetry pipelines, organizations can actively “control our telemetry to both save costs AND enable much needed insights.” This transforms observability from a passive activity of seeing what is happening into an active process of influencing and optimizing system behavior itself.
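To make the negative-feedback idea concrete, here is a minimal sketch. It assumes a pipeline that can change its sampling rate on the fly and can measure how many events per second actually reach the backend. The names (`adjust_sampling`, `target_eps`, `gain`) are hypothetical and are not ControlTheory settings. The controller is a plain proportional one, the simplest form of the loop described above.

```python
def adjust_sampling(rate: float, observed_eps: float, target_eps: float,
                    gain: float = 0.5) -> float:
    """Proportional controller: move the sampling rate against the error.

    `observed_eps` is the events/second that reached the backend while
    sampling at `rate`; `target_eps` is the ingest budget.
    """
    # Relative error: positive when ingest is over budget.
    error = (observed_eps - target_eps) / target_eps
    # Negative feedback: over budget lowers the rate, under budget raises it.
    new_rate = rate * (1.0 - gain * error)
    # A sampling rate is a probability; keep a small floor so some signal survives.
    return min(1.0, max(0.01, new_rate))


def simulate(raw_eps: list[float], target_eps: float) -> list[float]:
    """Run the loop over raw (pre-sampling) event rates; return ingested rates."""
    rate = 1.0
    ingested = []
    for raw in raw_eps:
        observed = raw * rate        # what actually reaches the backend this step
        ingested.append(observed)
        rate = adjust_sampling(rate, observed, target_eps)
    return ingested


# A spike: traffic jumps from 1,000 to 10,000 events/s and stays there.
traffic = [1_000.0] * 3 + [10_000.0] * 12
for step, eps in enumerate(simulate(traffic, target_eps=1_000.0)):
    print(f"step {step:2d}: ingested {eps:8.1f} events/s")
```

On the first spike step, this proportional-only version overshoots down to the sampling floor. It then climbs back and settles near the budget. A production controller would typically add integral action, limits on how fast the rate may change, and per-signal targets.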

The result is a radical departure from brittle, static data collection pipelines: Elastic Telemetry Pipelines. These pipelines dynamically auto-scale observability and proactively adapt to change through real-time feedback. Instead of dumping all data indiscriminately to a massive data lake, they intelligently manage the flow based on current conditions, costs, and the critical need for insight. Crucially, ControlTheory’s solution is agnostic to your existing tools, integrating seamlessly with over 100 different data sources and destinations, from proprietary agents to cloud-native services and legacy systems.

ControlTheory also moves beyond viewing OpenTelemetry merely as a data format or collection agent, recognizing it instead as a foundational platform that enables a higher layer of intelligence and control. ControlTheory’s solution “sits as a control plane on top of the OpenTelemetry Collector,” leveraging its inherent flexibility to orchestrate telemetry with unprecedented sophistication. This vision ties the future of advanced observability (controllability) closely to the intelligent use of open standards like OTel, fostering innovation above the collection layer rather than within proprietary silos.

The Three Pillars of Command: Cost, Operations, and Adaptability

ControlTheory’s transformative power rests on three interconnected pillars, delivered through its intelligent control plane operating seamlessly atop the OpenTelemetry Collector, ensuring compatibility with any existing telemetry source or destination.

Intelligent Cost Management: Slashing Waste, Unlocking Value

The financial hemorrhage caused by unmanaged telemetry is a primary target. ControlTheory prevents bill surprises by enabling organizations to pinpoint cost spikes and hotspots before they impact the bottom line. Its most potent weapon is custom metric cardinality reduction. ControlTheory automatically detects high-cardinality conditions, identifies the problematic labels (dimensions) causing cost explosions, and deploys intelligent filters. The result is substantial cost reductions, typically in the 20-30% range, on what is often the single largest line item in an observability budget.
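The cardinality-reduction step described above can be sketched in three moves: count distinct values per label, drop the worst offender, and re-aggregate the series that collapse together. This is a minimal illustration that assumes counter-style metrics whose values can be summed. The function names and the greedy "drop the highest-cardinality label" strategy are assumptions, not ControlTheory's published algorithm.

```python
from collections import defaultdict

# A series is (labels, value); e.g. ({"route": "/cart", "user_id": "u42"}, 3.0)
Series = list[tuple[dict[str, str], float]]


def label_cardinality(series: Series) -> dict[str, int]:
    """Count distinct values observed for each label key."""
    values: dict[str, set[str]] = defaultdict(set)
    for labels, _ in series:
        for key, value in labels.items():
            values[key].add(value)
    return {key: len(vals) for key, vals in values.items()}


def drop_label(series: Series, label: str) -> Series:
    """Remove one label and sum the values of series that become identical."""
    merged: dict[tuple, float] = defaultdict(float)
    for labels, value in series:
        key = tuple(sorted((k, v) for k, v in labels.items() if k != label))
        merged[key] += value  # summing is only valid for counter-like metrics
    return [(dict(key), total) for key, total in merged.items()]


def reduce_cardinality(series: Series, max_series: int) -> Series:
    """Greedily drop the highest-cardinality label until under the series budget."""
    while len(series) > max_series:
        cardinality = label_cardinality(series)
        if not cardinality:
            break  # no labels left to drop
        worst = max(cardinality, key=cardinality.get)
        series = drop_label(series, worst)
    return series
```

For example, a request counter labeled with `route`, `status`, and `user_id` would lose `user_id` first, because per-user labels usually dominate the series count.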

Beyond metrics, the platform provides comprehensive telemetry spike detection and control. It offers granular log volume analysis, attribution (identifying who or what generated the logs), and tracking. For lower-priority applications, it can proactively suppress spikes and enforce log volume guardrails, preventing minor issues from triggering financial avalanches. Logs are automatically enriched with attribution. This speeds root cause analysis and supports chargeback/showback models that allocate costs accurately. It also lets platform teams track quota adherence across development and product teams and apply enforcement mechanisms to control usage.
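Log volume guardrails of this kind are commonly built from token buckets. The sketch below assumes each record carries an OpenTelemetry-style `service.name` attribute. It gives each lower-priority service a hypothetical quota (refill rate and burst size) and lets services without a quota pass untouched. It also keeps per-service accepted and dropped counts that could feed a showback report. `TokenBucket` and `LogGuardrail` are illustrative names, not a product API.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class TokenBucket:
    rate: float                    # log lines refilled per second
    capacity: float                # largest burst admitted at once
    tokens: float = 0.0
    last: Optional[float] = None   # timestamp of the previous check

    def allow(self, now: float) -> bool:
        if self.last is None:      # first record: start with a full bucket
            self.tokens, self.last = self.capacity, now
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class LogGuardrail:
    def __init__(self, quotas: dict[str, tuple[float, float]]):
        # quotas: service name -> (lines per second, burst size)
        self.buckets = {svc: TokenBucket(rate, cap) for svc, (rate, cap) in quotas.items()}
        self.accepted: Counter = Counter()
        self.dropped: Counter = Counter()   # attribution data for chargeback/showback

    def admit(self, record: dict, now: float) -> bool:
        service = record.get("service.name", "unknown")
        bucket = self.buckets.get(service)
        allowed = bucket is None or bucket.allow(now)  # no quota means no limit
        (self.accepted if allowed else self.dropped)[service] += 1
        return allowed
```

Leaving high-priority services out of `quotas` mirrors the idea that guardrails apply to lower-priority applications only.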

ControlTheory further optimizes log management through intelligent filtering, deduplication, and enrichment. It filters out lower-priority noise and eliminates costly duplicate entries, simultaneously reducing expenses and dramatically increasing the signal quality delivered to analysis tools. The system includes safeguards like reminders and circuit breakers for debug logs, ensuring they are active only when truly needed, preventing accidental floods. It also enhances the value of retained logs by enriching them with additional context or combining/aggregating them for more efficient consumption.
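Deduplication with aggregation can be sketched as collapsing records that share the same service, severity, and message body within a time window into one record with a count. This minimal illustration rests on stated assumptions. The grouping key, the 10-second default window, and the output fields (`first_seen`, `last_seen`, `count`) are hypothetical choices, not ControlTheory's.

```python
from dataclasses import dataclass


@dataclass
class LogRecord:
    timestamp: float   # seconds
    service: str
    severity: str
    body: str


def dedupe(records: list[LogRecord], window_s: float = 10.0) -> list[dict]:
    """Collapse identical records seen within `window_s` of a group's first occurrence."""
    groups: list[dict] = []
    open_group: dict[tuple, int] = {}   # grouping key -> index of its latest group
    for rec in sorted(records, key=lambda r: r.timestamp):
        key = (rec.service, rec.severity, rec.body)
        idx = open_group.get(key)
        if idx is not None and rec.timestamp - groups[idx]["first_seen"] <= window_s:
            groups[idx]["count"] += 1
            groups[idx]["last_seen"] = rec.timestamp
        else:
            # Start a new group: first sighting, or the previous window has expired.
            open_group[key] = len(groups)
            groups.append({
                "service": rec.service,
                "severity": rec.severity,
                "body": rec.body,
                "first_seen": rec.timestamp,
                "last_seen": rec.timestamp,
                "count": 1,
            })
    return groups
```

A burst of 100 identical `db timeout` errors within ten seconds would leave the pipeline as a single record with `count` equal to 100. The signal is preserved while the duplicates are shed.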

Smart routing and cold storage strategies complete the cost optimization picture. Infrequently accessed logs are routed to significantly less expensive “cold storage” solutions like AWS S3, with the ability to rehydrate them when necessary. Adaptive routing rules allow organizations to split traffic, sending only high-priority logs and traces to premium, expensive observability tools based on specific conditions, incidents, or schedules.
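Adaptive routing rules like these can be expressed as a small policy that returns the destinations for each record. In the sketch below, every record goes to a cheap archive so it can be rehydrated later. Only three kinds of records also go to the premium backend: high-severity records, records from business-critical services, and everything during an active incident. The destination names, the severity ranking, and the `incident_mode` flag are assumptions made for illustration.

```python
from dataclasses import dataclass, field

SEVERITY_RANK = {"DEBUG": 0, "INFO": 1, "WARN": 2, "ERROR": 3, "FATAL": 4}


@dataclass
class RoutingPolicy:
    critical_services: set[str] = field(default_factory=set)
    min_premium_severity: str = "WARN"
    incident_mode: bool = False   # e.g. toggled by an alert or a schedule

    def destinations(self, record: dict) -> list[str]:
        # Everything lands in low-cost storage so it can be rehydrated later.
        dests = ["cold_archive"]
        rank = SEVERITY_RANK.get(record.get("severity", "INFO"), SEVERITY_RANK["INFO"])
        severe = rank >= SEVERITY_RANK[self.min_premium_severity]
        critical = record.get("service") in self.critical_services
        if self.incident_mode or severe or critical:
            dests.append("premium_backend")   # hypothetical expensive analysis tool
        return dests
```

Turning `incident_mode` on for the duration of an incident widens what reaches the premium tool. Turning it off restores the cheaper default.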

Built on an OpenTelemetry-powered platform, ControlTheory maximizes organizational flexibility, meeting both functional and cost objectives while avoiding the vendor lock-in common with proprietary observability tools. But its key differentiator lies in a deeper design philosophy: understanding the ‘why’ behind data collection is the ultimate control point. Unlike standard observability tools that passively gather data, ControlTheory empowers teams to define intent, enforce telemetry policies, and curate data pipelines in real time. This shifts telemetry from a technical liability into a high-leverage asset—enabling proactive, value-driven observability rooted in control and clarity. It acts as a meta-observability layer, observing why one observes, driving intelligent decision-making at the very source.

Enhanced Operational Efficiency: Accelerating Insights, Silencing Noise

ControlTheory directly tackles the rising MTTR and frustratingly slow root cause analysis by fundamentally improving the signal-to-noise ratio. By actively filtering, deduplicating, and enriching telemetry data in flight, it ensures that SREs, developers, and operations teams receive only the most relevant, high-fidelity information precisely when they need it. This laser focus on data quality over raw volume directly translates to faster problem identification (reduced Mean Time To Identify, or MTTI) and significantly faster resolution (reduced MTTR).

The platform acts as a force multiplier for modern initiatives. It accelerates OpenTelemetry adoption and crucial AI projects by feeding these systems refined, specific, high-quality data instead of raw, noisy feeds. AI models are notoriously sensitive to input data quality; garbage in truly means garbage out. ControlTheory ensures the telemetry fueling AI-driven operations is trustworthy and relevant. It also enables tracking adherence to, and governance of, telemetry semantic (naming) conventions (e.g., the OpenTelemetry Semantic Conventions). These conventions are emerging as key enablers of clear, correlatable context within and across telemetry signal types.
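Semantic-convention governance can start as a simple audit over attribute keys. The sketch below checks a small, illustrative subset of the OpenTelemetry HTTP attribute renames (for example, `http.method` became `http.request.method`). It also checks for the `service.name` resource attribute. The rule table and function names are assumptions; a real audit would be driven by the full, versioned conventions.

```python
# Illustrative subset of OpenTelemetry HTTP semantic-convention renames.
DEPRECATED_ATTRIBUTES = {
    "http.method": "http.request.method",
    "http.status_code": "http.response.status_code",
    "http.url": "url.full",
}
EXPECTED_RESOURCE_ATTRIBUTES = {"service.name"}


def audit_attributes(resource: dict, attributes: dict) -> list[str]:
    """Return human-readable findings for missing or deprecated keys."""
    findings = []
    for key in sorted(EXPECTED_RESOURCE_ATTRIBUTES - set(resource)):
        findings.append(f"missing resource attribute {key!r}")
    for key in sorted(attributes):
        if key in DEPRECATED_ATTRIBUTES:
            findings.append(f"{key!r} is deprecated; use {DEPRECATED_ATTRIBUTES[key]!r}")
    return findings


def normalize(attributes: dict) -> dict:
    """Rename deprecated keys, keeping any value already set under the new name."""
    out = {k: v for k, v in attributes.items() if k not in DEPRECATED_ATTRIBUTES}
    for old, new in DEPRECATED_ATTRIBUTES.items():
        if old in attributes and new not in out:
            out[new] = attributes[old]
    return out
```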

A key differentiator is ControlTheory’s ability to inject business context directly into telemetry pipelines. By understanding the business value of specific applications, services, or even individual transactions, the platform can dynamically prioritize, filter, and route data to ensure that critical business KPIs are always visible and actionable, rather than buried in generic noise.

Furthermore, ControlTheory provides robust auditing capabilities for observability pipelines. It can identify where telemetry data might be missing, corrupted, or misconfigured, flagging these issues proactively so remedial action can be taken before they lead to blind spots or incidents. This “observability of your observability” ensures the integrity and completeness of your insights. Operating observability at scale is also simplified through capabilities for managing and governing fleets of OpenTelemetry Collectors.
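Pipeline auditing for missing or corrupted data can be approximated with two checks. The first finds sources that have gone quiet for longer than expected; the second finds records missing required fields. The sketch assumes each source has a known reporting interval. The source names, the `grace` multiplier, and the required-field list are hypothetical.

```python
def find_silent_sources(last_seen: dict[str, float], expected_interval: dict[str, float],
                        now: float, grace: float = 2.0) -> list[str]:
    """Sources never seen, or silent for more than `grace` x their usual interval."""
    silent = []
    for source, interval in sorted(expected_interval.items()):
        seen = last_seen.get(source)
        if seen is None or now - seen > grace * interval:
            silent.append(source)
    return silent


def find_malformed(records: list[dict],
                   required: tuple[str, ...] = ("timestamp", "service.name")) -> list[int]:
    """Indexes of records that lack any required field."""
    return [i for i, rec in enumerate(records) if any(f not in rec for f in required)]
```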

ControlTheory enables decentralized observability governance, empowering engineering teams to manage and delegate observability responsibilities effectively, ensuring comprehensive coverage without central bottlenecks. The platform also significantly enhances the on-call experience. It correlates developer deployments and code commits with subsequent telemetry spikes, providing crucial context during incidents. It proactively manages logs in flight, preventing debug- or info-level logging configurations from accidentally flooding systems and causing secondary outages, turning potential disasters into minor blips.
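Correlating deployments with telemetry spikes reduces to two small steps. First, flag time buckets whose volume jumps well above a trailing baseline. Second, list the deployments that landed shortly before each spike. This is a minimal sketch; the 3x threshold, the five-bucket baseline, the lookback window, and the deploy identifiers are assumptions, not product defaults.

```python
def detect_spikes(counts: list[tuple[float, int]], factor: float = 3.0,
                  baseline_n: int = 5) -> list[float]:
    """Timestamps of buckets whose count exceeds `factor` x the trailing mean."""
    spikes = []
    for i in range(baseline_n, len(counts)):
        baseline = sum(c for _, c in counts[i - baseline_n:i]) / baseline_n
        ts, count = counts[i]
        if baseline > 0 and count > factor * baseline:
            spikes.append(ts)
    return spikes


def correlate(spike_times: list[float], deploys: list[tuple[float, str]],
              lookback_s: float = 600.0) -> dict[float, list[str]]:
    """Map each spike to the deploys that happened within `lookback_s` before it."""
    return {
        t: [name for deployed_at, name in deploys if 0 <= t - deployed_at <= lookback_s]
        for t in spike_times
    }
```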

Dynamic Adaptive Telemetry Pipelines: Building Resilience for the Future

ControlTheory directly addresses the inherent brittleness of static pipelines by introducing truly Elastic Telemetry Pipelines. These pipelines are not fixed conduits; they are dynamic systems that automatically scale observability coverage and proactively adapt to changes in the environment through continuous feedback loops. This enables continuous improvement and adjustment, supporting scenarios like auto-scaling instrumentation for new application releases or facilitating iterative Root Cause Analysis (RCA) processes.

For distributed tracing, a powerful but often prohibitively expensive tool, ControlTheory implements intelligent tail sampling. Because the keep-or-drop decision is made after a trace has completed, only the most important traces are sent to costly observability solutions: those most likely to be crucial for diagnosing complex issues. This improves RCA effectiveness while keeping trace-related costs under control.

Privacy and security compliance are not afterthoughts but foundational features. ControlTheory enables organizations to maintain strict governance by masking and obfuscating sensitive data, such as Personally Identifiable Information (PII), before it ever leaves the pipeline bound for observability systems. This allows organizations to leverage the full power of their observability tools without compromising data integrity or regulatory compliance. It also enables them to “democratize” their (redacted) telemetry data and “put it to work,” ensuring that downstream teams both inside and outside of engineering (product management, sales, etc.) have the data they need, when they need it.

This transformation of the OpenTelemetry Collector is profound. ControlTheory elevates the Collector from a simple, passive data forwarder into an intelligent control plane. This redefines its role within the observability architecture: it is no longer a dumb pipe but an active, decision-making node capable of real-time adjustments, filtering, and routing based on dynamic conditions and evolving business needs. This strategic shift leverages the open-source OTel standard as a powerful base upon which to build proprietary intelligence, unlocking its full potential as the central nervous system for controllable telemetry.
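The tail-sampling decision described earlier in this section can be sketched as follows. Spans are buffered by trace, and the decision is made once per complete trace. Any trace with an error or a slow span is kept, along with a small random baseline of normal traffic. The policy mix is similar in spirit to the latency, status-code, and probabilistic policies of the OpenTelemetry Collector contrib `tail_sampling` processor. However, the code below is a standalone illustration with hypothetical thresholds, not that processor's implementation or ControlTheory's.

```python
import random
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    trace_id: str
    name: str
    duration_ms: float
    is_error: bool = False


def tail_sample(spans: list[Span], latency_threshold_ms: float = 500.0,
                baseline_rate: float = 0.05,
                rng: Optional[random.Random] = None) -> dict[str, list[Span]]:
    """Keep whole traces that contain an error or a slow span, plus a random baseline."""
    rng = rng or random.Random()
    # Buffer spans by trace: the decision needs the complete trace, which is
    # what distinguishes tail sampling from head sampling.
    traces: dict[str, list[Span]] = defaultdict(list)
    for span in spans:
        traces[span.trace_id].append(span)

    kept = {}
    for trace_id, trace_spans in traces.items():
        has_error = any(s.is_error for s in trace_spans)
        slowest = max(s.duration_ms for s in trace_spans)
        if has_error or slowest >= latency_threshold_ms or rng.random() < baseline_rate:
            kept[trace_id] = trace_spans
    return kept
```

Because the decision waits for the whole trace, a real pipeline must bound how long it buffers spans and how much memory that buffering takes. That is the main operational cost of tail sampling compared with head sampling.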

The Tangible Triumph: Quantifiable Results and Strategic Transformation

Implementing ControlTheory’s controllability paradigm yields concrete, measurable benefits that resonate across financial, operational, and strategic dimensions.

Cost Reduction as a Strategic Enabler:

The financial impact is immediate and substantial. Reductions of 20-30% in custom metric costs are consistently achievable through intelligent cardinality management. Optimization of data collection and processing translates into direct savings on tool capacity licenses and overall data processing expenses.

Perhaps even more significantly, the automation built into ControlTheory’s telemetry pipeline management results in a 30%+ reduction in cloud computing resources used for data ingestion, processing, and routing. This efficiency gain dramatically improves the return on compute spend. Proactive cost management eliminates bill shock by enabling costs to be attributed to specific teams, applications, or users, fostering accountability and preventing difficult financial conversations.

Critically, these savings are not merely about cutting budgets; they serve as a strategic enabler. Resources previously consumed by “firefighting” and managing pipeline chaos can be reallocated to innovation, accelerating AI adoption, funding new feature development, and driving competitive advantage. Observability shifts from a burdensome cost center to an engine for business growth. Intelligent cold storage routing further slashes high retention fees associated with keeping infrequently accessed data in expensive hot storage.

Operational Excellence Regained:

The most visceral impact for teams like Sarah’s is the dramatic acceleration in incident response. By enhancing signal quality, integrating crucial business context, and ruthlessly eliminating noise, ControlTheory delivers significant reductions in Mean Time To Identify (MTTI) and Mean Time To Resolve (MTTR). Faster, more precise root cause analysis becomes the norm, not the exception. The improved relevance and clarity of data directly translate to more informed and timely decision-making at all levels. Integrating diverse telemetry sources also provides deeper context, further refining insights and enabling superior performance optimization.

The platform empowers AI-driven predictive maintenance, with documented results in various industries showing potential for up to 35% reductions in downtime and 50% cuts in MTTR. The on-call experience for development teams is transformed from a source of dread to one of confidence, thanks to correlated context and noise suppression. Automated data quality checks with proactive alerting identify and resolve issues—including missing or corrupted data detected through pipeline auditing—before they impact critical business processes, enhancing overall organizational agility and reliability.

Future-Proofing with Open Standards and AI:

ControlTheory doesn’t just address today’s observability challenges—it equips organizations with a scalable, intelligent foundation for AI-driven operations, autonomous systems, and adaptive infrastructure. It ensures that telemetry becomes a strategic enabler, not a burden, positioning teams to lead in a future defined by complexity, velocity, and intelligent automation.

By ensuring the availability of refined, specific, high-quality telemetry, the platform dramatically accelerates the adoption and success of OpenTelemetry and critical AI projects. It acts as the essential data preparation layer, transforming raw, chaotic data into AI-ready intelligence, directly addressing a major bottleneck for effective AI deployment in IT operations (AIOps).

This positions ControlTheory not just as an optimizer, but as a crucial enabler for predictive maintenance, anomaly detection, and the journey towards autonomous operations. Its foundation on OpenTelemetry and its support for over 100 data sources/destinations maximizes freedom of choice and effectively mitigates vendor lock-in, providing long-term strategic flexibility and reducing migration costs. Built-in capabilities for masking PII and obfuscating sensitive data ensure robust governance and compliance are maintained even as data flows for analysis.
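The PII masking mentioned above can either destroy a value or replace it with a stable pseudonym. The sketch below assumes a hypothetical policy: pseudonymize an email attribute, and scrub email addresses and US Social Security number patterns from message bodies. It uses a keyed HMAC-SHA256 so that the same person maps to the same token across records, keeping correlation possible without exposing the raw value. The regexes, key list, and key handling are simplified assumptions. Production redaction needs broader patterns and a secret, rotated key.

```python
import hashlib
import hmac
import re

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
SENSITIVE_KEYS = {"user.email"}                 # hypothetical attribute policy
SECRET_KEY = b"replace-with-a-managed-secret"   # placeholder; never hard-code in practice


def pseudonymize(value: str) -> str:
    """Stable keyed token: the same input always yields the same output."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"anon-{digest[:12]}"


def mask_text(text: str) -> str:
    """Pseudonymize email addresses and blank out SSN-shaped numbers."""
    text = EMAIL.sub(lambda m: pseudonymize(m.group(0)), text)
    return US_SSN.sub("***-**-****", text)


def redact_record(record: dict) -> dict:
    """Return a copy of the record that is safer to forward to observability backends."""
    out = {}
    for key, value in record.items():
        if key in SENSITIVE_KEYS:
            out[key] = pseudonymize(str(value))
        elif isinstance(value, str):
            out[key] = mask_text(value)
        else:
            out[key] = value
    return out
```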

The inherent “control plane” architecture allows for the dynamic adaptation of infrastructure based on evolving business needs, ensuring long-term agility. By delivering a clean, optimized, high-signal data stream, ControlTheory creates the fertile ground necessary for “new analytics based on causality, inference, agentic systems, and other emerging AI tech to blossom, flourish and thrive.”

Ecosystem Credibility and a Collaborative Strategy

ControlTheory’s approach to market entry is deeply grounded in ecosystem credibility and strategic collaboration. The company’s advisory board includes Chris Aniszczyk, CTO of the Cloud Native Computing Foundation (CNCF), a pivotal figure in the cloud-native movement. His involvement not only signals alignment with open-source principles and CNCF’s vision, but also affirms ControlTheory’s commitment to industry standards like OpenTelemetry. This relationship lends significant technical and strategic validation.

Crucially, ControlTheory does not position itself as a disruptor in the conventional sense. Rather than aiming to displace entrenched vendors, it presents itself as an enhancer, an augmentation layer designed to improve outcomes for users of leading observability platforms. As the founders succinctly state: “We want to help DataDog customers make their environment better.”

This philosophy is baked into the architecture and go-to-market approach. ControlTheory integrates with any source and any destination, optimizing telemetry across hundreds of formats and all major signal types (logs, metrics, traces, profiles) before routing clean, value-rich data to downstream systems like Prometheus, Grafana, Splunk, Datadog, Dynatrace, and New Relic. This plug-in model allows organizations to preserve existing investments while significantly improving performance, cost-efficiency, and signal-to-noise ratios.

By lowering friction, avoiding rip-and-replace upheaval, and embracing standards and partnerships, ControlTheory fosters real collaboration among customers, vendors, and the broader observability community. This cooperative stance not only accelerates adoption but also positions ControlTheory as a long-term ecosystem ally in the mission to make telemetry controllable.

The Imperative for Command Of Your Future: Conclusion and Call to Action

Modern enterprises are navigating a digital environment defined by escalating complexity, relentless data growth, and rising cost pressure. Yet observability, the discipline designed to provide clarity, has become a source of confusion, sprawl, and runaway spend. Traditional telemetry pipelines and legacy SIEMs struggle to scale, lacking the adaptability to manage today’s signal volume, velocity, and variability. The result? Lengthening MTTR, vanishing ROI, and rising burnout. Observability, once a strategic promise, now risks becoming a strategic liability.

ControlTheory delivers the necessary evolution. By embedding the principles of control theory into the heart of telemetry management, it introduces a new paradigm: controllability—real-time, adaptive pipelines that self-optimize, reduce noise, manage costs, and preserve context. This isn’t passive monitoring. It’s intelligent, dynamic command over the entire observability lifecycle.

Its Elastic Telemetry Pipelines are already delivering quantifiable results:

- 20–30%+ reductions in ingest and storage costs

- 50%+ improvements in MTTR

- 30%+ cloud resource savings

- Complete visibility and financial control, eliminating bill shock

But this is about more than optimization. It’s about freeing observability to fuel innovation. AI initiatives, faster product cycles, richer customer experiences. Observability becomes a business enabler, not just an engineering expense.

The foundation is proven: a seasoned team with a decade of success, trusted by investors who’ve backed them before, and validated by cloud-native luminaries like CNCF’s CTO. The solution is ecosystem-friendly, vendor-agnostic, and immediately compatible with existing tooling. No rip-and-replace. No lock-in. Just results.

The conclusion is clear: The future of enterprise telemetry cannot be static, expensive, or passive. It must be dynamic, intelligent, and under control.

ControlTheory isn’t just managing the signal chaos of today; it’s enabling Command of the Future.

Organizations ready to transform observability from a liability into their sharpest competitive advantage should engage ControlTheory and partner with the team that has shaped multiple cloud-native inflection points, and now offers the capability to truly take command.
