Why High Cardinality Makes Datadog Costs Hard to Control

High-cardinality logs and telemetry don’t just drive up spend. They’re a signal that observability decisions are happening too late in your pipeline. The good news is that high-cardinality problems in Datadog can be corrected without major surgery or loss of information.

This post explores why logs quietly dominate cardinality, why traditional controls fail, and how distillation reframes observability around system health. While this post uses Datadog as a concrete example, the same high-cardinality dynamics apply across modern observability stacks built on centralized log and telemetry pipelines.

What Is High Cardinality — and Why Does It Cost So Much?

In Datadog, cardinality refers to how many unique combinations of tags or attributes your telemetry data produces. The more dimensions you attach to a signal, the more unique time series, log fields, or trace attributes Datadog has to ingest, process, and store.

Consider a metric like api.request.count. On its own, it’s harmless. But tag it with env, region, user_id, and container_id, and you’ve suddenly created thousands — or even millions — of distinct time series. Each one is billed. Queries slow down. Dashboards lag. And most teams don’t notice the problem until Datadog costs spike.
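To make the multiplication concrete, here is a minimal Python sketch. The distinct-value counts per tag are hypothetical, chosen only for illustration. The product of distinct values per tag is a worst-case ceiling on unique series; the number actually reported depends on which tag combinations really occur together.

```python
from math import prod

# Hypothetical distinct-value counts per tag (illustrative, not measured).
tag_cardinality = {
    "env": 3,
    "region": 4,
    "user_id": 10_000,
    "container_id": 200,
}


def max_series(tags: dict[str, int]) -> int:
    """Upper bound on unique time series: the product of distinct values per tag."""
    return prod(tags.values())


print(max_series({"env": 3, "region": 4}))  # 12
print(max_series(tag_cardinality))          # 24000000
```

Two low-cardinality tags stay small; adding a per-user and a per-container tag multiplies the ceiling into the tens of millions.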

Logs push this dynamic even further.

If every log line includes dynamic fields — request IDs, session tokens, payload fragments, headers — cardinality grows continuously and invisibly. Even if those fields are rarely queried, you’re still paying to ingest, process, and often index them. Over time, logs quietly become the largest and hardest-to-control source of Datadog spend.

Traces add yet another layer, capturing real-time request context across services and environments. Combined, logs, metrics, and traces create an observability pipeline where cardinality compounds faster than teams can react.

This is why high cardinality is so difficult to manage — and why traditional controls rarely feel effective.

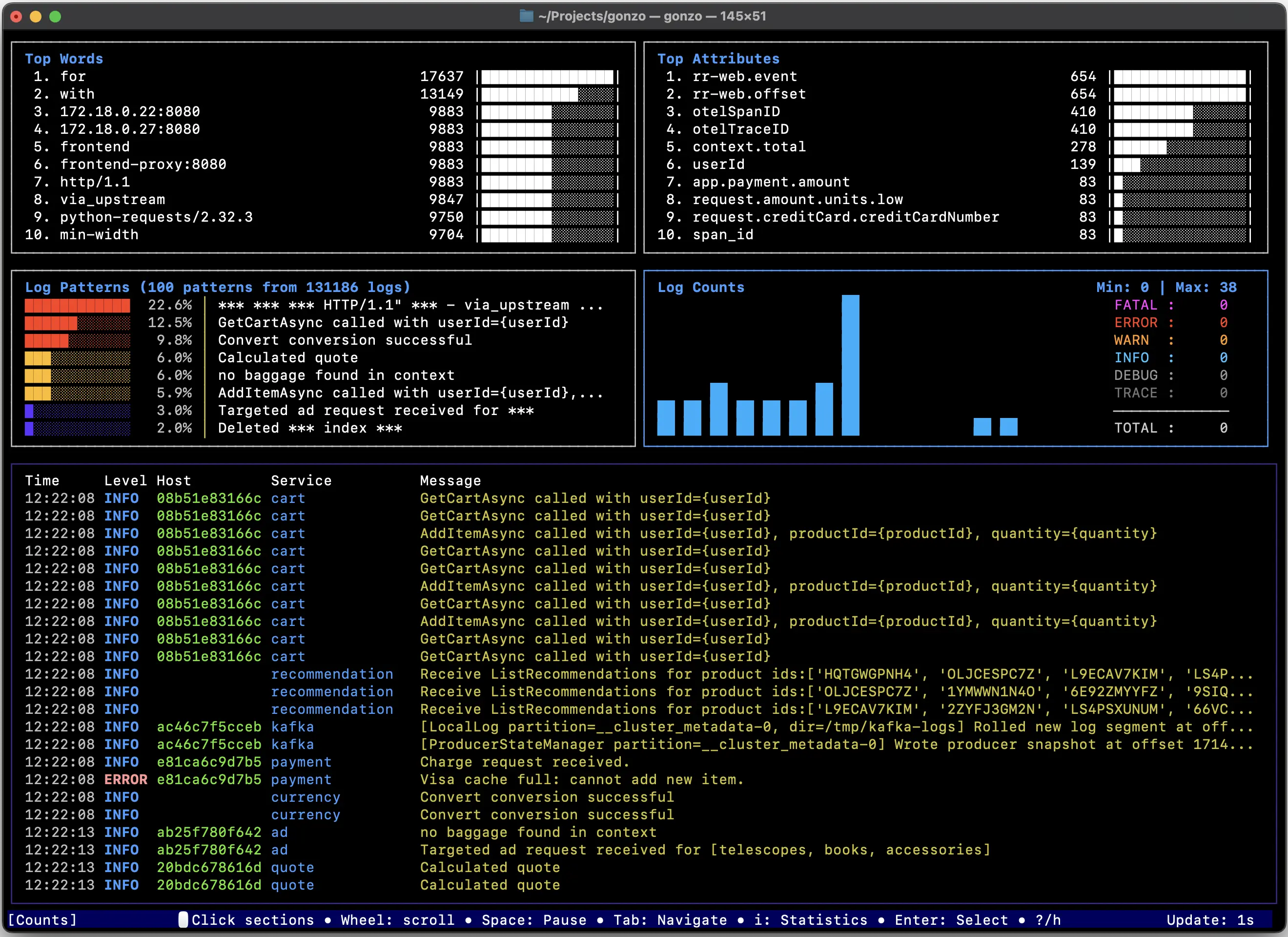

Logs: The Silent Driver of Datadog High Cardinality

Logs are the backbone of observability — but they’re also the largest and least controlled source of cardinality in Datadog.

Unlike metrics or traces, logs are:

- Unstructured

- High-volume

- Rich in dynamic fields

Every log line can introduce new dimensions such as:

- Request IDs and trace IDs

- User IDs, session IDs, and tenant IDs

- Container, pod, node, and cluster identifiers

- File paths, URLs, and query parameters

- Feature flags, experiment variants, and rollout versions

- Error messages with embedded values

At scale, this explodes fast. A single microservice emitting thousands of logs per minute, each with slightly different values, can generate millions of unique field combinations per day. Once these fields are indexed or parsed, Datadog’s cardinality curve bends sharply upward, often without teams realizing it until the bill arrives.
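The following minimal sketch shows how one dynamic field dominates. It uses synthetic records with hypothetical field names (`service`, `status`, `request_id`): grouping by two stable fields yields a handful of combinations, while adding a per-request ID makes every line unique.

```python
def unique_combinations(records: list[dict], fields: list[str]) -> int:
    """Count distinct value tuples for the chosen fields across all records."""
    return len({tuple(r.get(f) for f in fields) for r in records})


# Synthetic logs: 3 services x 2 statuses, but every line carries its own request_id.
records = [
    {
        "service": f"svc-{i % 3}",
        "status": "error" if i % 2 else "ok",
        "request_id": f"req-{i}",
    }
    for i in range(6000)
]

print(unique_combinations(records, ["service", "status"]))                # 6
print(unique_combinations(records, ["service", "status", "request_id"]))  # 6000
```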

What makes logs especially dangerous is that their cardinality is often accidental:

- A new deployment adds a field

- A debug statement sneaks into production

- A JSON payload includes a user-specific value
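Because these fields appear without anyone deciding to add them, one option is to flag them the first time they show up. The sketch below is a hypothetical illustration, not a Datadog feature: `FieldDriftDetector` remembers which keys each service has emitted and reports keys it has not seen before.

```python
from collections import defaultdict


class FieldDriftDetector:
    """Hypothetical helper: tracks keys per service and reports first-time keys."""

    def __init__(self) -> None:
        self.known: defaultdict[str, set[str]] = defaultdict(set)

    def observe(self, service: str, record: dict) -> set[str]:
        """Return the keys in `record` never seen before for this service."""
        new_keys = set(record) - self.known[service]
        self.known[service].update(record)  # iterating a dict yields its keys
        return new_keys


detector = FieldDriftDetector()
detector.observe("checkout", {"level": "info", "msg": "ok"})
print(detector.observe("checkout", {"level": "info", "msg": "ok", "user_email": "a@example.com"}))
# {'user_email'}
```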

Why Traditional Log Pipelines Break Down

Most log pipelines were built on a simple assumption: collect everything first, decide what matters later.

That approach worked when systems were smaller and logs were sparse. At modern scale, it collapses under its own weight. The moment raw logs are centralized, cardinality is already baked in. From there, teams are left with a limited set of controls, all of them applied too late in the process:

- Indexing rules move the cost, not the problem. High-cardinality fields are still ingested, parsed, and processed before indexing decisions apply. You give up query flexibility to save on indexing, but ingestion complexity and surprise growth remain.

- Sampling hides the rare events you actually care about. Incidents, regressions, and edge-case failures are statistically rare, which makes them the first to disappear under sampling.

- Exclusion filters require perfect foresight. Teams must predict which fields, services, or environments will never matter. In practice, the most expensive fields are often the ones you discover only after an incident.

- Dashboards and alerts don’t reduce cardinality. They help humans cope with noise, but they don’t change the underlying shape of the data being ingested.

By the time logs reach Datadog, the system has already lost leverage. All that remains is damage control.
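The sampling problem can be quantified under a simplified model. Assuming uniform random sampling at rate r, with each occurrence kept independently (real samplers may be smarter), an event that occurs k times is lost entirely with probability (1 - r)^k. This sketch evaluates that at a 1% sample rate:

```python
def prob_all_dropped(sample_rate: float, occurrences: int) -> float:
    """Probability that independent uniform sampling keeps none of the occurrences."""
    return (1 - sample_rate) ** occurrences


for k in (1, 10, 100, 1000):
    print(k, round(prob_all_dropped(0.01, k), 3))
# 1 0.99
# 10 0.904
# 100 0.366
# 1000 0.0
```

Frequent, routine events almost always survive. A one-off failure is dropped 99% of the time.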

The Missing Layer: Distillation Before Centralization

High cardinality isn’t inevitable: it’s the result of where decisions are made in the observability pipeline. In other complex systems, raw inputs are never stored or analyzed directly. They’re distilled first — reduced, summarized, and shaped into signals that can be controlled over time.

Observability has historically skipped this step. Instead of transforming logs at the source, most pipelines forward raw events downstream and hope centralized tools can sort them out later. The result is a flood of unbounded context competing for attention, storage, and budget.

A distillation layer changes the equation:

- Reduce raw log volume before indexing and storage

- Collapse high-cardinality fields into patterns and summaries

- Detect changes, anomalies, and emerging behaviors in real time

- Preserve meaning while shedding unnecessary dimensionality
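One simple way to collapse high-cardinality values into patterns is to mask variable tokens and count the resulting templates. The sketch below uses plain regex masking as a stand-in technique. The masking rules are hypothetical, and this is not a description of how Dstl8 or any specific product distills logs.

```python
import re
from collections import Counter

# Hypothetical masking rules; a real pipeline would tune these per log source.
# UUIDs are masked first so their digits are not split into <NUM> tokens.
MASKS = [
    (re.compile(r"\b[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}\b"), "<UUID>"),
    (re.compile(r"\b\d+(?:\.\d+)?\b"), "<NUM>"),
]


def to_pattern(line: str) -> str:
    """Replace variable tokens with placeholders, leaving the message template."""
    for regex, placeholder in MASKS:
        line = regex.sub(placeholder, line)
    return line


def distill(lines: list[str]) -> Counter:
    """Summarize raw lines as pattern -> occurrence count."""
    return Counter(to_pattern(line) for line in lines)


lines = [
    "user 1842 login failed after 3 attempts",
    "user 77 login failed after 5 attempts",
    "request 9f1c2d3e-1a2b-4c3d-8e9f-0a1b2c3d4e5f took 120 ms",
    "request 0b1c2d3e-4f5a-4b6c-9d7e-8f9a0b1c2d3e took 87 ms",
]
for pattern, count in distill(lines).items():
    print(count, pattern)
# 2 user <NUM> login failed after <NUM> attempts
# 2 request <UUID> took <NUM> ms
```

Four unique lines become two patterns with counts. The meaning is preserved, and the number of distinct values no longer grows with traffic.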

This isn’t about throwing data away. It’s about extracting signal early, while the system still has control.

When logs are distilled at the edge, close to where they’re generated, cardinality becomes manageable, costs become predictable, and observability shifts from data collection to system understanding.

From Cardinality to Health, Stress, and Hotspots

The real goal isn’t to tame cardinality for its own sake. It’s to understand the state of the system. When raw logs are distilled early, observability shifts from tracking millions of dimensions to monitoring health, stress, and emerging hotspots. Instead of reacting to cost spikes or drowning in log noise, teams can see when services are degrading, where pressure is building, and which behaviors are changing before they become incidents.
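Once logs are reduced to pattern counts, spotting changing behavior becomes a comparison between time windows. This minimal sketch flags patterns that are new and patterns that surged. The `surge_ratio` threshold and the example patterns are hypothetical, and a production detector would likely use a more robust statistical baseline.

```python
from collections import Counter


def diff_windows(previous: Counter, current: Counter, surge_ratio: float = 3.0):
    """Compare pattern counts from two time windows.

    Returns (new, surging): patterns absent from `previous`, and patterns whose
    count grew by at least `surge_ratio` times relative to `previous`.
    """
    new = sorted(p for p in current if p not in previous)
    surging = sorted(
        p for p, n in current.items()
        if p in previous and n >= surge_ratio * previous[p]
    )
    return new, surging


prev = Counter({"GET /cart <NUM>ms": 900, "db timeout after <NUM>ms": 5})
curr = Counter({
    "GET /cart <NUM>ms": 950,
    "db timeout after <NUM>ms": 40,
    "payment retry <NUM>": 12,
})
print(diff_windows(prev, curr))
# (['payment retry <NUM>'], ['db timeout after <NUM>ms'])
```

High-volume but steady traffic is ignored. A low-volume pattern that grows eightfold, or one that never appeared before, stands out as a potential hotspot.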

Dstl8 applies this distillation layer at the edge, transforming high-cardinality logs into concise, correlated signals that reflect how systems are actually behaving. The result isn’t just lower Datadog spend — it’s a clearer, more controllable view of system health that scales with complexity. Cardinality becomes a symptom, not the problem, and observability becomes a tool for understanding rather than storage.

Learn more about other Datadog optimizations, including tail sampling for traces and boosting AI SRE tools.
